Jensen Huang: AI We Can Trust Is Still Years Away

Artificial intelligence is rapidly reshaping industries and our daily lives, which makes the question of whether we can trust it more pressing than ever.

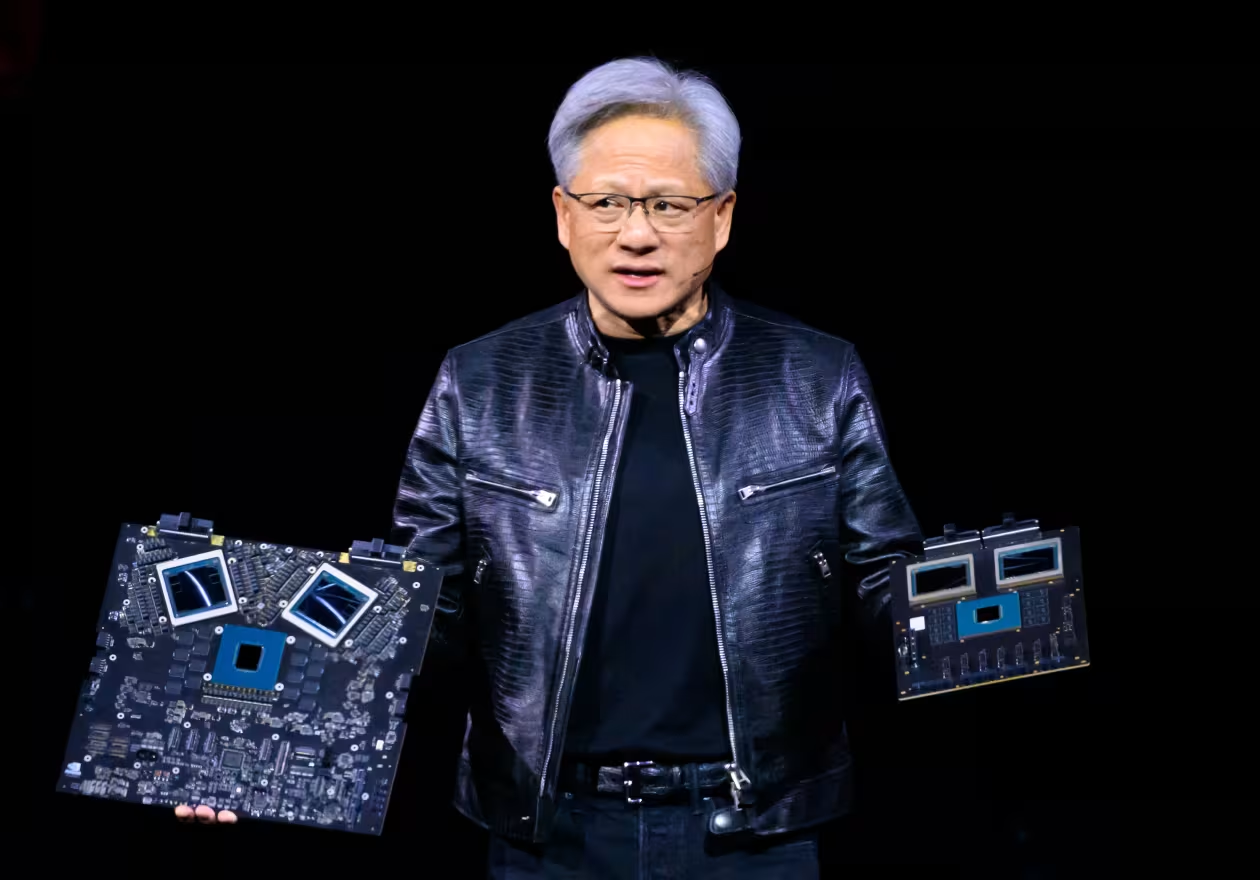

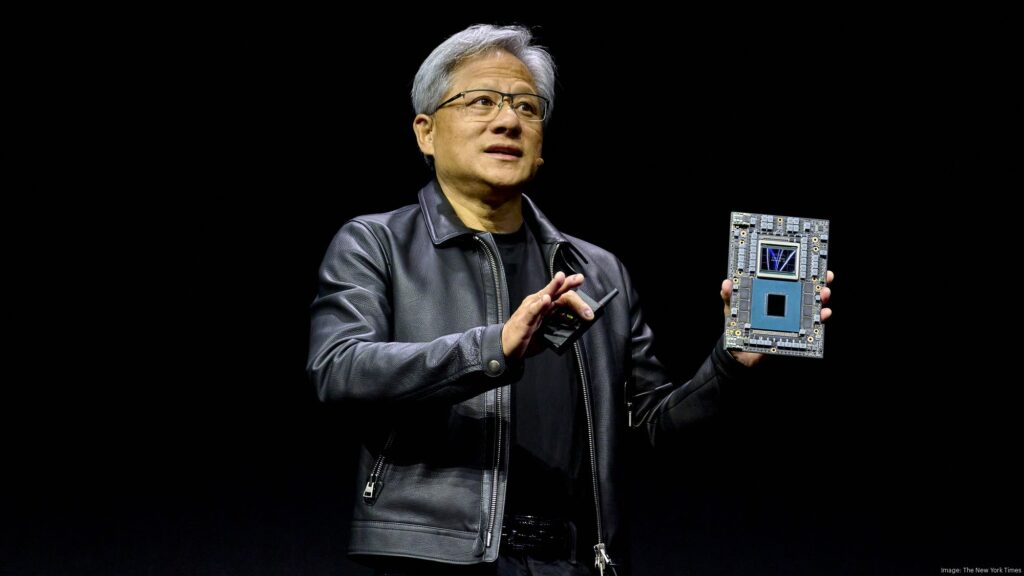

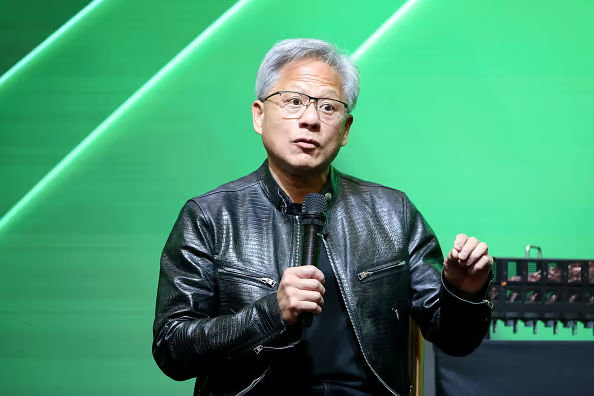

Enter Jensen Huang, the CEO of NVIDIA, whose views on this issue resonate with tech enthusiasts and critics alike. In a recent discussion, Huang offered a candid assessment.

Progress in AI development is real. However, reaching the level of trust that society expects is still years away. Join us as we explore Huang's ideas.

We will look at what it takes to build safe and reliable AI, and why ethical responsibility matters along the way. Buckle up: it's going to be an enlightening ride!

Introduction to Jensen Huang and his views on AI trustworthiness

Jensen Huang is the co-founder and CEO of NVIDIA, and he has had an outsized influence on the tech industry. His predictions about artificial intelligence carry considerable weight, and his insights often spark conversations that go beyond technical specifications into ethical considerations.

Recently, he emphasized a vital point: AI we can truly trust is still years away. This statement raises eyebrows and invites curiosity. What does it mean for the future of technology? In an age where AI influences almost every sector, understanding its limitations becomes essential.

Let’s dive deeper into Huang’s perspective on trustworthy AI and explore what stands between us and this promising frontier.

The current state of AI and its limitations

Artificial intelligence has seen remarkable advancements in recent years, yet it remains a work in progress. Current systems can handle specific tasks with impressive speed and accuracy, but they lack true understanding.

For instance, AI excels at data analysis and pattern recognition. However, when faced with ambiguous situations or ethical dilemmas, its decision-making falters, and that creates uncertainty about its reliability.

Moreover, natural language processing tools often misinterpret context or sentiment. They may generate coherent text yet struggle to grasp the deeper meaning behind the words.

Training models on biased data can also lead to unfair outcomes, which raises concerns about the fairness of AI decisions that affect people's lives and livelihoods.

These limitations highlight that while AI is powerful, it cannot yet replicate human judgment and intuition effectively. Trusting these technologies requires addressing their shortcomings head-on before we can fully embrace them in daily life.

Factors affecting the development of trustworthy AI

The journey toward trustworthy AI is not straightforward. Several factors play a critical role in shaping its development.

Firstly, data quality is paramount. Algorithms learn from data; if the input is biased or flawed, the output will be unreliable. Using diverse and representative datasets can mitigate these issues.

Secondly, transparency in algorithms matters. Understanding how these systems make decisions fosters trust among users and stakeholders alike. When people can see behind the curtain, they are more likely to embrace AI systems.

Regulatory frameworks also influence this landscape. Clear guidelines help create standards for ethical use and accountability in AI development.

Lastly, collaboration between technologists and ethicists keeps innovation responsible. By combining technical skill with ethical awareness, we can build AI systems that are safer and that remain reliable as they evolve.

Challenges in achieving trustworthy AI

Achieving trustworthy AI is no simple feat. One major challenge lies in data bias. If the training data reflects societal biases, the AI can perpetuate or even amplify these issues.

Another hurdle is transparency. Many algorithms function as “black boxes,” which makes it difficult to understand how they make decisions. This obscurity breeds skepticism and distrust.

Accountability also poses a significant problem. When an AI system fails or causes harm, pinpointing responsibility becomes complex. Is it the developer, the user, or the technology itself?

Moreover, regulatory frameworks lag behind the technology, and current laws struggle to keep pace with rapid developments in AI.

Lastly, public perception plays a crucial role. Mistrust can stem from sensationalized media portrayals of AI mishaps, further complicating efforts to build reliable systems that society can embrace confidently.

Steps being taken to address these challenges

Developing trustworthy AI requires a collaborative approach. Researchers, technologists, and ethicists are joining forces to create frameworks that enhance transparency. This effort aims to demystify how AI systems make decisions.

Organizations are investing in rigorous testing processes. These include bias detection protocols and validation methods that help ensure models function as intended across diverse scenarios.
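To make "bias detection" a little more concrete, here is a minimal sketch of one widely used check, the demographic parity difference: the gap between groups in how often a model gives a positive prediction. The function names, the toy approval data, and the group labels are hypothetical illustrations, not a description of any specific organization's testing process.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Return the fraction of positive (1) predictions for each group label."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-prediction rate between any two groups (0.0 means parity)."""
    rates = positive_rates(predictions, groups)
    if not rates:
        raise ValueError("need at least one prediction")
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical toy data: 1 = approved, 0 = rejected, one group label per applicant.
    preds = [1, 1, 0, 1, 0, 0, 1, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(positive_rates(preds, groups))                 # {'A': 0.75, 'B': 0.25}
    print(demographic_parity_difference(preds, groups))  # 0.5
```

In this toy example group A is approved three times as often as group B, which a review process would likely flag. Demographic parity is only one of several fairness criteria, and which one is appropriate depends on the application.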

Regulatory bodies are also stepping up their game. New guidelines focus on ethical standards for AI deployment, pushing companies to prioritize accountability and responsibility in their technologies.

Additionally, educational initiatives are becoming more prevalent. Programs that focus on ethics in technology help future innovators think carefully about the effects of their work.

Crowdsourcing public input is another promising tactic. Engaging communities allows developers to understand societal perspectives better and build systems with broader acceptance and trustworthiness in mind.

Potential timeline for when we can expect trustworthy AI

Predicting a timeline for trustworthy AI is challenging. Experts suggest that achieving this milestone may take another decade or more.

Current advancements are promising, yet widespread implementation remains far off. Many organizations are still grappling with biases and ethical concerns in AI systems.

Countries around the world are developing regulatory frameworks at various speeds. These regulations could play a crucial role in establishing standards for trustworthiness.

Some industry leaders believe we might see significant improvements within five years. However, true reliability may not arrive until 2035 or beyond.

The pace of innovation, along with ongoing research into responsible AI practices, will shape this timeline. Stakeholders must prioritize collaboration to accelerate progress toward trustworthy solutions.

Impact of trustworthy AI on society and industries

Trustworthy AI has the potential to revolutionize various sectors. In healthcare, for instance, accurate diagnostics and personalized treatment plans can enhance patient outcomes. When patients trust AI systems, they are more likely to engage with technology in their care.

In finance, reliable algorithms can detect fraud and manage investments more efficiently. Businesses that use trustworthy AI can gain a competitive edge while staying compliant with regulations.

Education could also see significant changes. Personalized learning experiences powered by dependable AI can cater to individual student needs, promoting better understanding and retention of information.

Moreover, industries like transportation stand to benefit from safer autonomous vehicles. Trustworthy systems could lead not only to fewer accidents but also to greater public acceptance of such technologies.

Ultimately, as society embraces these advancements, the demand for ethical considerations in developing trustworthy AI becomes paramount.

The importance of continued research and ethical considerations in the development of AI we can trust

The journey toward creating trustworthy AI is complex and multifaceted. As Jensen Huang emphasizes, achieving a level of reliability that society can fully embrace requires ongoing effort and thoughtful consideration.

Research must continue to evolve, delving deep into the ethical implications of AI technology. This means not just developing advanced algorithms but also ensuring they are designed with transparency and accountability in mind.

Moreover, collaboration among technologists, ethicists, policymakers, and industry leaders is crucial. By bringing diverse perspectives together, we can address the challenges head-on while fostering innovation.

Public trust hinges on consistent engagement with these issues. Only through committed research and open dialogue about ethics can we build an AI landscape where users feel secure.

Ultimately, as we navigate this transformative era of artificial intelligence, prioritizing trustworthiness will define our progress and shape the future for generations to come.